FOVear 2014

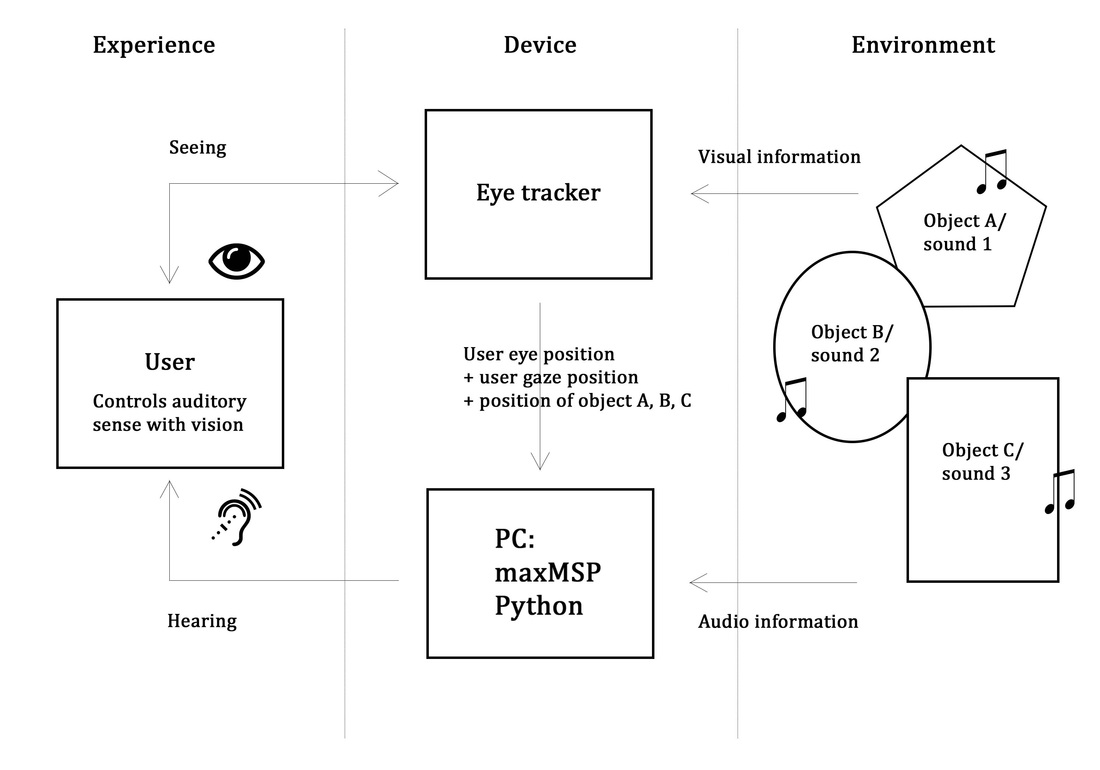

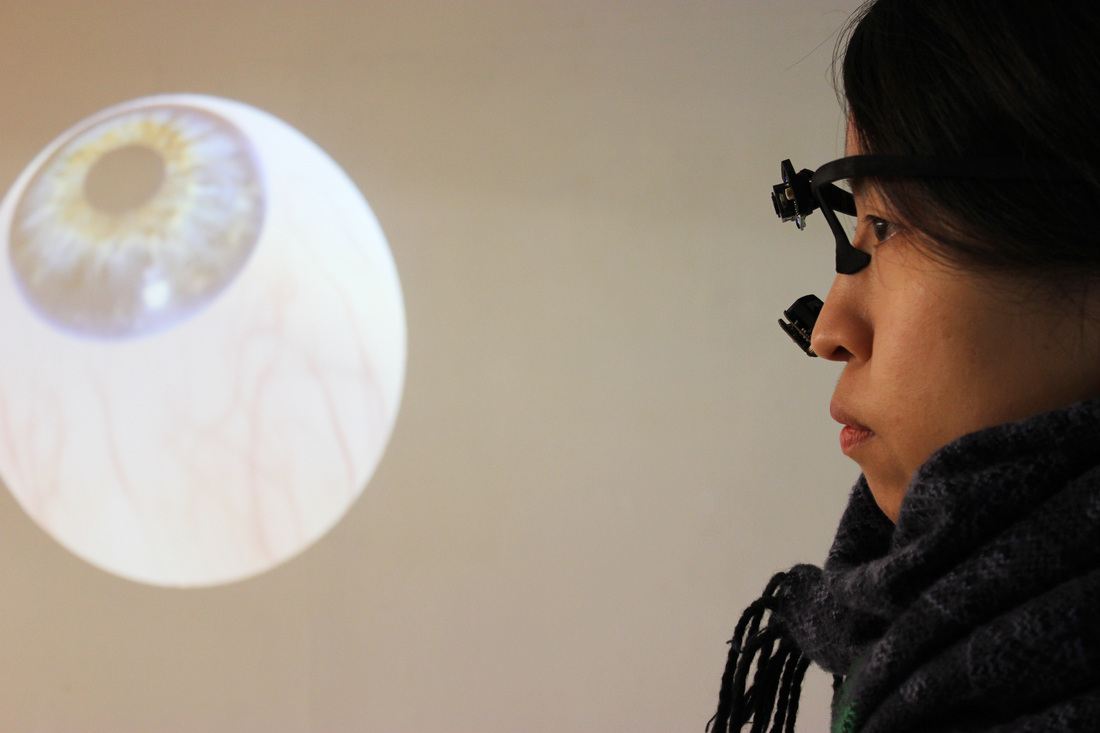

The FOVear is a visual-auditory sensory integration system. It is designed to explore what happens when the sensory qualities of vision (active, with high foveal acuity and relatively easy selective attention) are transferred to hearing (comparatively passive, with selective attention relatively difficult). An active and acutely selective hearing modality is fascinating in an artistic sense, and it may also have wider applications in fields such as assistive technology and entertainment. Imagine a classical concert at which listeners can choose which section of the orchestra they hear, or a football game at which they can get statistics on the player in possession of the ball, all simply by looking.

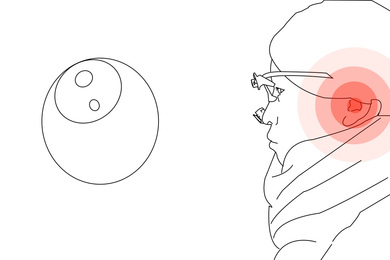

FOVear works by tracking the user’s eye movements with the open-source Pupil eye tracker. Blob-detection software tracks the positions of designated objects in the scene. These positions are combined with the user’s gaze position to work out which object the user is looking at and when. Looking at different objects triggers different sounds. In essence, the user becomes able to direct their auditory attention in the same way that comes naturally with vision. The experience proves much more profound than the well-documented cocktail party effect, in which a listener picks out a single conversation in a noisy room.
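
The gaze-to-object step can be sketched as a hit test followed by a dwell timer, so that a sound fires only once the gaze has settled on an object rather than on every passing glance. This is a minimal illustration, not FOVear's actual code. It assumes that gaze points and blob centroids arrive in the same normalised scene-camera coordinates (0 to 1). `TrackedObject`, `GazeSoundTrigger`, the hit radius, the 0.3 s dwell time and the `.wav` names are all hypothetical.

```python
"""Hypothetical sketch of gaze-triggered sounds (not FOVear's actual code).

Assumes gaze points and blob centroids share normalised scene-camera
coordinates (0..1). All names and constants are illustrative.
"""
from dataclasses import dataclass
import math


@dataclass
class TrackedObject:
    name: str      # label of a designated object found by blob detection
    x: float       # blob centroid, normalised scene coordinates
    y: float
    radius: float  # hypothetical hit radius around the centroid
    sound: str     # hypothetical sound clip identifier


def object_under_gaze(gaze, objects):
    """Return the nearest object whose hit radius contains the gaze point, or None."""
    gx, gy = gaze
    best, best_dist = None, math.inf
    for obj in objects:
        d = math.hypot(gx - obj.x, gy - obj.y)
        if d <= obj.radius and d < best_dist:
            best, best_dist = obj, d
    return best


class GazeSoundTrigger:
    """Fires an object's sound once gaze has dwelt on it for `dwell` seconds."""

    def __init__(self, dwell=0.3):
        self.dwell = dwell
        self.current = None  # name of the object currently looked at
        self.since = None    # time the gaze landed on it
        self.fired = False   # whether its sound has already been triggered

    def update(self, t, gaze, objects):
        """Process one gaze sample at time t; return a sound to play, or None."""
        target = object_under_gaze(gaze, objects)
        name = target.name if target else None
        if name != self.current:
            # Gaze moved to another object or to empty space: restart the dwell timer.
            self.current, self.since, self.fired = name, t, False
            return None
        if target and not self.fired and t - self.since >= self.dwell:
            self.fired = True
            return target.sound
        return None


if __name__ == "__main__":
    objects = [
        TrackedObject("violin", 0.25, 0.5, 0.1, "violin.wav"),
        TrackedObject("cello", 0.75, 0.5, 0.1, "cello.wav"),
    ]
    trigger = GazeSoundTrigger(dwell=0.3)
    samples = [(0.0, (0.26, 0.5)), (0.2, (0.24, 0.51)),
               (0.4, (0.25, 0.49)), (0.5, (0.74, 0.5))]
    for t, gaze in samples:
        sound = trigger.update(t, gaze, objects)
        if sound:
            print(f"t={t}: play {sound}")
```

Objects are compared by name rather than by identity because the blob tracker would report fresh positions every frame. The dwell threshold trades responsiveness against false triggers from saccades passing over an object.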

The device could be used effectively in the classroom to assist students with attentional disorders. One possibility would be not only to amplify sounds from the area the user is looking at but also to block out all peripheral sounds with noise-cancelling headphones. This would leave the user little choice but to focus on the teacher or another designated subject.
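
The software side of this idea, amplifying the attended source and attenuating or muting everything else, can be sketched as gaze-dependent gains applied before mixing. This is a hypothetical illustration. The noise-cancelling headphones would handle real ambient sound, which this code does not model. `attention_gains`, `mix`, the focus radius, boost, floor and the exponential fall-off are all assumptions chosen for illustration.

```python
"""Hypothetical sketch of gaze-weighted mixing for the classroom idea.

Gains fall off with distance from the gaze point. With
block_peripheral=True every source outside the focus radius is muted.
All names and constants are illustrative assumptions.
"""
import math


def attention_gains(gaze, sources, focus_radius=0.15, boost=2.0,
                    floor=0.2, block_peripheral=False):
    """Map each source name to a linear gain based on its distance from gaze.

    gaze: (x, y); sources: dict of name -> (x, y) in normalised coordinates.
    Sources inside focus_radius get `boost`; others decay exponentially
    towards `floor`, or are muted when block_peripheral is True.
    """
    gx, gy = gaze
    gains = {}
    for name, (sx, sy) in sources.items():
        d = math.hypot(gx - sx, gy - sy)
        if d <= focus_radius:
            gains[name] = boost
        elif block_peripheral:
            gains[name] = 0.0
        else:
            # Exponential fall-off outside the focus region, never below `floor`.
            gains[name] = max(floor, math.exp(-(d - focus_radius) / focus_radius))
    return gains


def mix(frames, gains):
    """Sum equal-length mono sample lists, each scaled by its source's gain."""
    length = len(next(iter(frames.values())))
    out = [0.0] * length
    for name, samples in frames.items():
        g = gains.get(name, 0.0)
        for i, s in enumerate(samples):
            out[i] += g * s
    return out


if __name__ == "__main__":
    sources = {"teacher": (0.5, 0.5), "window": (0.9, 0.2), "classmate": (0.2, 0.8)}
    print(attention_gains((0.5, 0.5), sources))
    print(attention_gains((0.5, 0.5), sources, block_peripheral=True))
```

Keeping a non-zero `floor` in the default mode preserves some awareness of the room. The `block_peripheral` switch corresponds to the stricter option of blocking out all peripheral sounds.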

Huge thanks to the following people:

1. Professor Shinsuke Shimojo, Caltech, for his patience and advice.

2. The excellent Pupil Labs eye-tracking project, which is used in this project. github link here

3. Dr. Miquel Espi from the Graduate School of Information Science and Technology, The University of Tokyo, for his help with Python.

4. Rory McDonnell and Ally Mobbs (Actor Actor x Mobbs) for the use of their song "Let It".